Earlier this year we wrote an article covering some background on the fast-evolving capabilities of artificial intelligence, along with their potential positives and negatives for our industry.

Over the past few months we have seen increased engagement with AI chat bots such as Google Bard, Microsoft’s AI-powered Copilot and ChatGPT here in Australia. The foundation of these bots is large language models (LLMs), which are algorithms that can process natural language inputs and then predict the next word based on what they have already seen. The bots then keep predicting the next words in their responses until their answers are complete.
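To make the “predict the next word, then repeat” loop concrete, here is a deliberately tiny sketch. It is not how production LLMs work internally (they use neural networks trained on enormous datasets); it simply counts which word follows which in a few example sentences and then generates text one word at a time. The names `train_bigram`, `generate` and the `<end>` marker are our own illustrative choices.

```python
from collections import Counter, defaultdict

END = "<end>"  # hypothetical marker meaning "the answer is complete"

def train_bigram(sentences):
    """Count which word follows which in the training sentences."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split() + [END]
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def generate(follows, prompt, max_words=20):
    """Repeatedly append the most likely next word until END or the limit."""
    words = prompt.lower().split()
    for _ in range(max_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # the model has never seen this word, so it stops
        next_word = candidates.most_common(1)[0][0]
        if next_word == END:
            break  # the model predicts that the answer is complete
        words.append(next_word)
    return " ".join(words)

# Toy training data, invented purely for illustration.
corpus = [
    "large language models predict the next word",
    "language models predict text",
    "models predict the next word",
]
model = train_bigram(corpus)
print(generate(model, "language models"))
# prints: language models predict the next word
```

Even in this toy version, the output depends entirely on what the model has seen before, which is the same reason the quality of training data matters so much for real chat bots.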

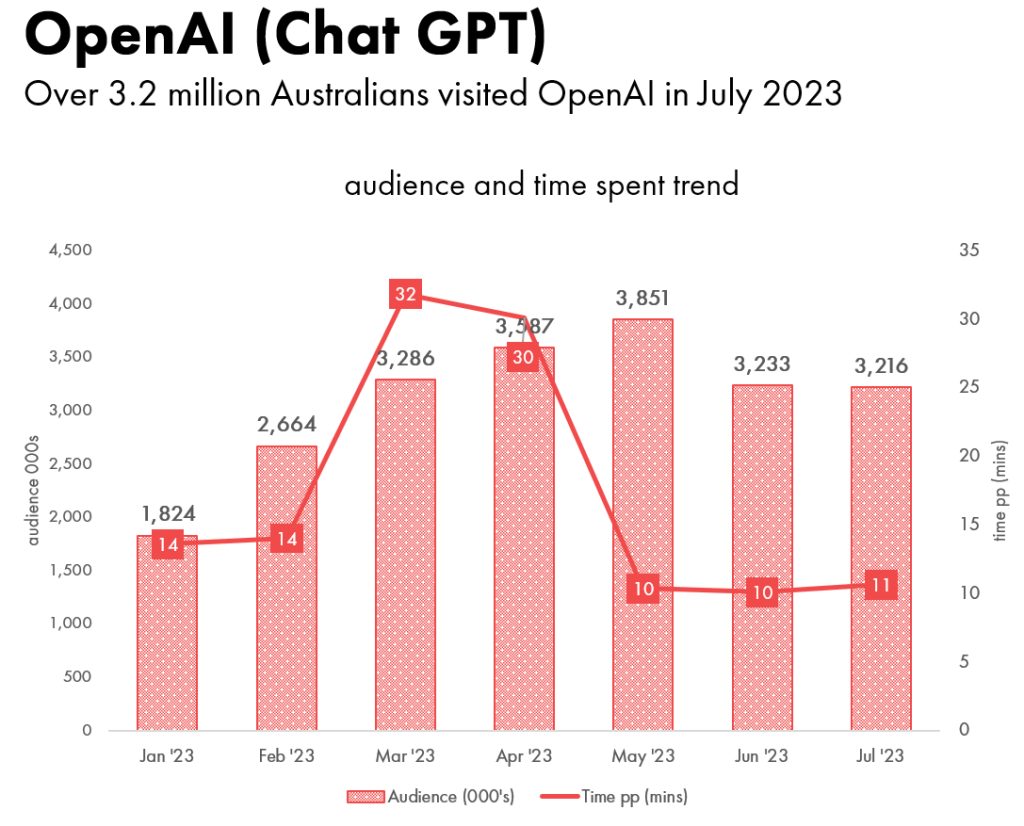

We have been tracking some of the consumer engagements with ChatGPT in the monthly Ipsos Iris reports, as per the below:

image source: Ipsos Iris

In terms of the impact on our industry’s efficiency and effectiveness, it’s still too soon to truly tell. We do know, however, that the capabilities of machine learning have been leveraged for a number of years now, as noted in our recent submission to the Department of Industry, Science and Resources’ consultation on Safe and Responsible AI.

As a follow-up to the original AI article, this week we spoke to Andy Houston, Chief Commercial Officer at Crimtan, about some of his thoughts on this increasingly important topic.

The traditional digital media ad models rely upon verifiable human attention to succeed. How could AI potentially affect or evolve current monetisation models for buyers and/or sellers?

We’re still discovering the various opportunities, dangers and limitations of AI, and human attention is still a critical tool in any ad model.

At the very least, humans are still needed to verify and approve ad campaigns for them to succeed.

But the idea of AI optimising and monetising opportunities isn’t anything new. We’re already doing that in programmatic, and have been for some time. Crimtan’s technology stack processes over a million ad opportunities per second and, based on various predictive models, chooses the most effective and optimised direction. AI will just continue enhancing this method.

We must remember, though, that AI is only one arm of data science, so we shouldn’t put too much weight on it alone.

Just because AI is the latest industry buzzword doesn’t mean non-linear series and other attribution modelling can’t produce similar or better efficiencies.

We’re excited to see how AI continues to evolve processes, but we will also continue investing in other data science avenues, to ensure our predictive and programmatic methods are as robust and effective as humanly (and artificially) possible.

What considerations are there related to mitigating any commercial, privacy and/or legal risks when leveraging the potential capabilities of AI for marketing purposes?

There are two considerations when it comes to privacy and AI: protecting your own business’s private and sensitive data, and upholding your customers’ right to privacy of their own personal data. Both are equally crucial to get right.

However you collect and leverage PII and data, whether through AI programs or more traditional means of data collection and targeting, your dataset is one of your greatest assets.

Implementing private AI libraries will ensure that your data remains your own, and your confidential commercial data won’t be used in other public AI arenas. And while it’s tempting to instruct AI to grow your database as much as possible, it’s still your responsibility to protect your customers’ privacy.

We all know AI systems have the capability to collect and analyse vast amounts of data. And as this becomes more advanced, AI can make decisions based on subtle patterns in data that are difficult even for humans to detect. This means that customers may not be aware of how their personal data is being used to make decisions that affect them, raising the question of whether it is ethical to target people in this way. What’s more, AI is only as unbiased as the data it’s trained on, so if human inputs are biased, the outputs become biased too.

As AI continues to become more prevalent in our lives, marketers must continue to uphold privacy standards and ensure this emerging technology is used in an ethical, responsible way. How do we do this?

Most current AI tools are a black box; we understand the data that’s inputted and we can see the data that is outputted, but how AI gets from A to B is often unknown. AI can also ‘lie’ when driven to focus solely on a particular outcome.

So you should leverage and maximise the possibilities of AI whilst keeping the necessary safeguards, checks and balances in place to ensure you’re still doing your due diligence, including complying with the applicable regulations (the Australian Privacy Act 1988 and the GDPR), just like you would when handling any other data.

The release of ‘question and answer’ AI tools has also led to several breaches of confidentiality of personal data. Public-facing AI systems are set up to ingest all the data they receive, so in inexperienced hands sensitive customer data could be exposed or stolen. In the same way you wouldn’t upload your commercial data to your company’s website for anyone to see, don’t submit sensitive data to an AI tool either. Adhere to existing data privacy policies and limit the access external actors have to your business information to ensure you remain compliant as you integrate AI into your marketing strategies.
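As one illustration of what a safeguard could look like in practice, the sketch below masks obvious personal details before any text is passed to a public AI tool. It is a minimal example under stated assumptions, not a complete PII detector: the `redact` function and both patterns are hypothetical, they only catch email addresses and phone-like numbers, and things like names or street addresses would pass straight through.

```python
import re

# Hypothetical, deliberately simple patterns; real PII detection needs far more.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_PATTERN = re.compile(r"\+?\d[\d\s-]{7,}\d")

def redact(text):
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_PATTERN.sub("[EMAIL]", text)
    text = PHONE_PATTERN.sub("[PHONE]", text)
    return text

# Invented example text; note that the customer's name is NOT caught.
prompt = "Contact Jane at jane.doe@example.com or +61 400 123 456 about her order."
print(redact(prompt))
# prints: Contact Jane at [EMAIL] or [PHONE] about her order.
```

A filter like this is only a backstop. The safer default remains not sending customer data to public AI systems at all, and treating any automated redaction as one layer among your existing privacy controls.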

Data usage and submission to AI applications should follow the same data security protocols you already apply elsewhere, such as PCI compliance for payment information or ISO/IEC 27001 for information security management. You should already have safeguards in place to protect existing data from cyber breaches of any kind, so make sure these cover the risks for data exposed in AI applications too.