Written by Danny Tyrrell, Co-Founder and Chief Operating and Product Officer, DataCo Technologies.

While Google may have recently delayed its full deprecation of third-party cookies, there is still concern within the digital marketing and advertising industry about its preparedness for a cookieless future.

Secure data collaboration platforms or data clean rooms have gained traction as a key solution for re-establishing critical data connections for activation and measurement in a privacy-compliant manner.

Although many data collaboration platforms or data clean rooms tout state-of-the-art privacy-enhancing technologies, there has been an ongoing debate about whether information processed through these technologies should be considered personal information and how it will be treated under regulatory frameworks.

The majority of data clean room environments promote sophisticated encryption techniques that remove direct identifiers before the data is used, and cite this as justification for treating the data as de-identified. However, it is the nature of the outputs from these environments, and the ability of a recipient to use those outputs (possibly combined with other available data points) to re-identify individuals, that plays the biggest role in determining whether the data is in fact (and in law) de-identified.

While the amendments to the Privacy Act in Australia have yet to be finalised, there is substantial information available relating to how data is used within secure collaboration environments to help organisations develop basic principles of data use and understand what the likely statutory treatment of data collaboration will be.

Current OAIC guidance states that for data to be considered de-identified, the risk of an individual being re-identified in the data needs to be very low in the relevant release context. Proposals are being considered that would treat information as personal information if it ‘relates to’ an identifiable individual, or if an individual can be ‘acted upon’ (in the sense of being treated differently from others, whether alone or as a member of an audience segment) regardless of whether the individual is identifiable.

Simply put, if you can receive individual record-level information that can be directly linked back to an individual once it leaves the environment, it is most likely going to be considered personal information and regulated as such, regardless of how sophisticated the original encryption techniques are.
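To make the point about record-level outputs concrete, here is a minimal sketch of one common heuristic: counting how many output rows share the same combination of indirect attributes. This is not the OAIC's test or any platform's actual method. The field names, the sample rows, and the function `smallest_group_size` are all hypothetical.

```python
from collections import Counter

def smallest_group_size(records, quasi_identifiers):
    """Return the size of the smallest group of records that share the same
    values for the given fields (the 'k' in k-anonymity). Returns 0 if empty."""
    groups = Counter(
        tuple(record[field] for field in quasi_identifiers)
        for record in records
    )
    return min(groups.values()) if groups else 0

# Hypothetical output rows: no names or emails, but still record-level.
output_rows = [
    {"postcode": "3000", "age_band": "30-39", "segment": "A"},
    {"postcode": "3000", "age_band": "30-39", "segment": "B"},
    {"postcode": "3121", "age_band": "60-69", "segment": "A"},
]

k = smallest_group_size(output_rows, ["postcode", "age_band"])
print(k)  # 1: one row is unique on postcode + age band
```

A result of 1 means at least one row is unique on those attributes, so a recipient holding other data with the same fields could plausibly link it back to a person, even though no direct identifier is present.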

However, use cases within these environments are not restricted to simply enriching or activating audiences, so data going into these platforms cannot always be treated as personal information either.

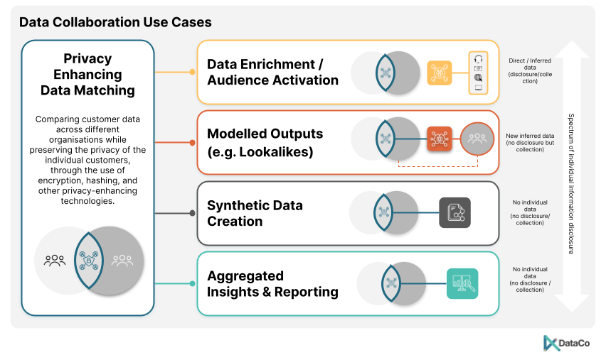

Data Collaboration Use Cases

To understand some general principles of data treatment in secure collaboration platforms further, we can explore five common use cases facilitated by these platforms.

| Use Case | What it involves | Expected treatment | Key Questions to Ask |
| --- | --- | --- | --- |
| Data Enrichment | Combining data from various sources to enhance an existing dataset with more detailed attributes. | The output includes new individual-level information and is typically treated as personal information due to its re-identifiable nature. | Do you have current, specific consent for individuals’ information to be shared in this manner? Does your organisation have appropriate use, collection, and disclosure notices within its privacy policies? |
| Audience Activation | Creating specific audience segments for targeted campaigns without exposing underlying data outside of the secure data environment. | Similar to enriched data, the output involves individual-level information that can be re-identified, or (under the current proposals) that enables an individual to be differentially ‘acted upon’, so it is likely to be treated as personal information. | Does your organisation have appropriate disclosures in privacy policies and privacy (collection) notices given to affected individuals? For data types that are subject to consent requirements, do you have current, specific consent for individuals’ information to be shared in this manner? |
| Modelled Outputs | Combining data from different sources to create statistical models that predict behaviours or preferences. | If individual-specific scores are generated and used, these should be treated as newly generated personal information. | Is data from both sides required for scoring based on the model? Can the model single out individuals from the partner organisation? Does the receiver have the appropriate privacy notices to handle these outputs? |
| Synthetic Data Creation | Generating entirely new datasets that mimic real-world statistical properties but do not relate to actual individuals. | If appropriate privacy-enhancing techniques like differential privacy are used, synthetic data can be considered de-identified. | What methods are used to ensure the synthetic data does not revert to identifiable data? Are inputs to the synthetic data process sufficiently de-identified or pseudonymised? |
| Aggregated Reporting | Compiling data insights at a high level to observe market trends without individual data points. | Properly aggregated reports should be treated as de-identified data, assuming controls are in place to prevent data queries that could identify individuals. | What techniques ensure that aggregated reporting does not lead to re-identification? Are inputs adequately de-identified before entering the environment? |
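As one illustration of the kind of control the aggregated reporting use case relies on, the sketch below suppresses any report cell that covers fewer than a minimum number of individuals. It is an assumption-laden example, not a description of any particular platform: the threshold of 10, the `region` field, and the function `aggregate_with_suppression` are hypothetical.

```python
from collections import Counter

MIN_CELL_SIZE = 10  # hypothetical threshold; the right value depends on context

def aggregate_with_suppression(records, group_by, min_cell_size=MIN_CELL_SIZE):
    """Count records per group and withhold (None) any count below the threshold."""
    counts = Counter(record[group_by] for record in records)
    return {
        group: (count if count >= min_cell_size else None)
        for group, count in counts.items()
    }

# Hypothetical matched audience, reduced to a single reporting dimension.
matched = (
    [{"region": "NSW"}] * 25
    + [{"region": "VIC"}] * 12
    + [{"region": "TAS"}] * 3
)

report = aggregate_with_suppression(matched, "region")
print(report)  # {'NSW': 25, 'VIC': 12, 'TAS': None}
```

Small-cell suppression on its own does not stop someone from comparing overlapping queries to isolate an individual, which is why the table pairs aggregation with controls on the queries themselves.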

Key Takeaways

While organisations must ensure they obtain the right legal advice and regularly review their privacy policies in accordance with regulatory guidance, there are some general principles they can follow to ensure their data usage remains compliant:

- Deploy Proactive Privacy Enhancing Techniques: It is critical to ensure that data is sufficiently anonymised / pseudonymised before it leaves your secure infrastructure to minimise privacy risks. Employ state-of-the-art cryptographic methods and privacy-enhancing technologies, and continually evaluate them against emerging threats.

- Control Data Use within Platforms: Ensuring collaboration platforms can constrain the use of data to the context of each use case is critical to distinguishing between personal and de-identified information.

- Maintain Customer Transparency: Maintain clear and up-to-date data handling policies that reflect current practices and adhere to legal requirements.

- Continuously Review Privacy Assessments: As the landscape evolves, so should your privacy strategies. Conduct regular assessments to identify potential vulnerabilities and adapt processes accordingly.
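The first principle above, pseudonymising data before it leaves your infrastructure, can be sketched with a keyed hash. This is a minimal example under stated assumptions, not a recommendation of a specific scheme: the function name `pseudonymise`, the normalisation step, and the sample key are hypothetical, and a real key would be generated and stored securely rather than written in code.

```python
import hashlib
import hmac

def pseudonymise(identifier: str, secret_key: bytes) -> str:
    """Replace a direct identifier (e.g. an email) with a keyed SHA-256 digest.

    The identifier is trimmed and lower-cased first so that trivially
    different spellings of the same value map to the same pseudonym.
    """
    normalised = identifier.strip().lower()
    return hmac.new(secret_key, normalised.encode("utf-8"), hashlib.sha256).hexdigest()

# Hypothetical key for illustration only.
key = b"example-key-do-not-use"

token_a = pseudonymise("Jane.Doe@example.com", key)
token_b = pseudonymise("  jane.doe@example.com ", key)
print(token_a == token_b)  # True: same person, same pseudonym
```

Because the same input always yields the same token, records can still be matched and acted upon. A pseudonymised output can therefore still be personal information, in line with the article's point that the treatment depends on what leaves the environment rather than on the encryption applied going in.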

Understanding and managing how data is shared and protected helps ensure compliance with the law and builds trust with individuals, so that the digital marketing and advertising industry can continue to create shared value throughout society.

Danny Tyrrell is the Co-Founder and Chief Operating and Product Officer of DataCo Technologies (dataco.ai), a secure data collaboration technology business for and by ANZ Bank to enable the safe and responsible use of data between organisations in a privacy-first world.

Hear more at the IAB Australia Data & Privacy Summit on Wednesday 15th May.